At I/O, we don’t just announce a bunch of news, like new Gemini models, AI agents and Android updates — we let developers, reporters and partners experience some of that newness in action for the very first time through product demos.

This year, I was lucky enough to spend the day at Shoreline Amphitheatre, where I/O happens, and dive into all of the demos. Here’s the inside scoop on a few of them.

For my first demo of the day, I watched Gemini Advanced parse through a 20-plus page property lease, full of complicated legal phrasing and gotchas. I could then ask questions about the lease, like whether my landlord would allow me to have a pet dog, or whether I’d have to pay any extra fees. (I’m personally looking forward to being able to use the feature to decipher my next convoluted lease when my apartment is up for renewal.)

The next demo took things up a notch: Two Googlers fed Gemini the PDF of an entire economics textbook that was hundreds of pages long. It would have taken me hours to read the book, but Gemini was able to summarize and highlight important topics to study in seconds. It also made a multiple choice quiz — creating not just the single correct choice but also three incorrect answers to try and trip me up — to help me prepare for a theoretical upcoming test.

Googlers Sid Lall (left) and Adam Kurzrok (right) demonstrate how Gemini Advanced can now summarize a hefty economic textbook or thousands of pages of documents.

Googlers Sid Lall (left) and Adam Kurzrok (right) demonstrate how Gemini Advanced can now summarize a hefty economic textbook or thousands of pages of documents.

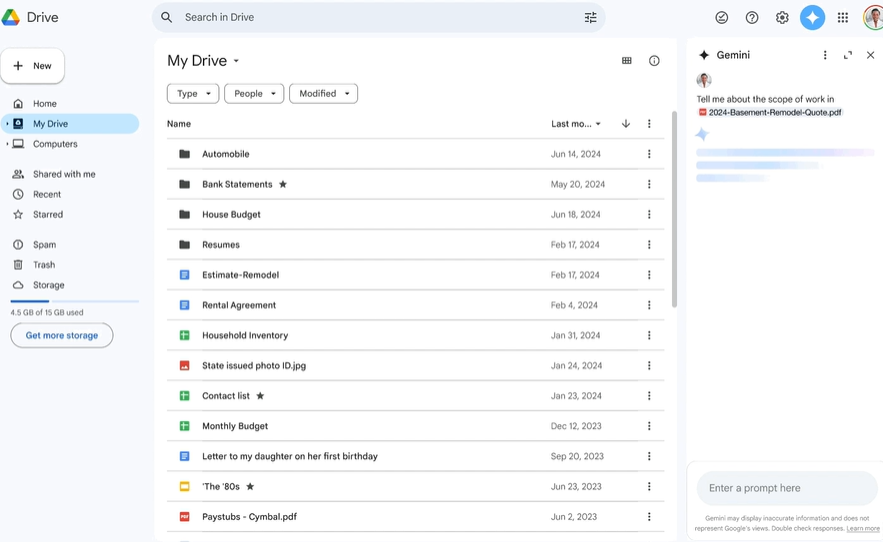

Both these demos took advantage of Gemini 1.5 Pro — which added the longest context window of any large-scale foundation model when we debuted it earlier this year. We’re opening up early Gemini 1.5 Pro access to Gemini Advanced subscribers and giving them the ability to upload documents to the tool right from Drive, so they can use Gemini to summarize or analyze documents up to 1,500 pages long.

Gemini 1.5 Pro is also coming to the side panel of Workspace apps like Gmail, Docs, Sheets, Slides, and Drive. To see this in action, I used Gemini in Gmail to summarize a sample email of a weekly school report, and pull out specific details like which activities were for 7th-grade students, or what the packing list for an overnight trip was.

Gemini’s side panel can help you answer key questions about your content in Gmail, Drive and more.

Gemini’s side panel can help you answer key questions about your content in Gmail, Drive and more.The improved long context window can even pull information from multiple documents when responding to a single prompt. In the side panel in Docs, I asked for help writing a sample letter to a potential job candidate — in the prompt I linked to the job description document and the applicant’s PDF portfolio, both of which were in my Drive — and instantly received a email draft, which factored in relevant details from both documents.

Gemini 1.5 Pro isn’t our only shiny new model, though: I also got to try the freshly-announced Imagen 3, our highest-quality text-to-image model yet. One of the new abilities I was excited about was its ability to generate decorative text and letters, so I put it through its paces. I started by asking for a stylized alphabet — like letters spelled out in jam on toast, or with silver balloons floating in the sky. Imagen 3 generated a full alphabet of letters, which I could then use to type out my own (delicious) menus.

After my Imagen 3 interlude, I continued with more Gemini demos. In one of them, I could pull up Gemini’s overlay on an Android phone and ask questions about anything on the screen. This really showed how we’re not only expanding what you can ask Gemini, but we’re also making Gemini context aware, so it can anticipate your needs and provide helpful suggestions.

The use case here was a lengthy oven manual. Whether it's a demo or real life, that's not something I'd be excited about reading. Instead of skimming through the document, I pulled up Gemini and immediately got an "Ask this PDF" suggestion. I tested questions like "how do I update the clock" and quickly got accurate answers. It worked just as well with YouTube videos. Instead of watching a 20-minute workout video, I asked a quick question about how to modify planks, got an answer, and was on my way onto the next demo, where I tested a new conversation mode called Gemini Live that lets you talk with Gemini in the app, no typing required.

Speaking with Gemini was a different experience than the traditional chatbot interface: Gemini’s answers are a lot more conversational than the paragraphs of texts and bullet-pointed lists you might usually get. In my demo, I learned you could even cut off Gemini in the middle of an answer. After asking for a list of kid’s activities for a summer vacation, I was able to interrupt a list of suggestions to dive in deeper on what materials I’d need for tie-dying a shirt.

The Project Astra — or “advanced seeing and talking responsive agent” — demo took things a step further to show the cutting edge of where our conversational AI projects are heading.

Our AI Sandbox, where developers and attendees tried out demos like Project Astra and other creative AI experiments, like MusicFX’s DJ Mode.

Our AI Sandbox, where developers and attendees tried out demos like Project Astra and other creative AI experiments, like MusicFX’s DJ Mode.Instead of only working with whatever is on your screen, or the information that you’ve typed into a chat box, Astra’s multimodal capabilities can understand conversational speech prompts and live video feeds at the same time to unlock new kinds of AI experiences.

Astra’s alliteration demo started out simply: I showed the camera — an overhead camera in this setup, but Astra can also use a phone camera or a camera on a wearable device — an object, like a banana or a piece of bread, and Gemini riffed on it with an alliterative sentence. I added more objects, and Gemini kept the conversation going, from “Bright bananas bask beautifully on the board” with a single fruit to “Culinary creations can catch the eye” when presented with a whole buffet board.

Astra alliterates with bananas, baguettes… and anything else you can show it.

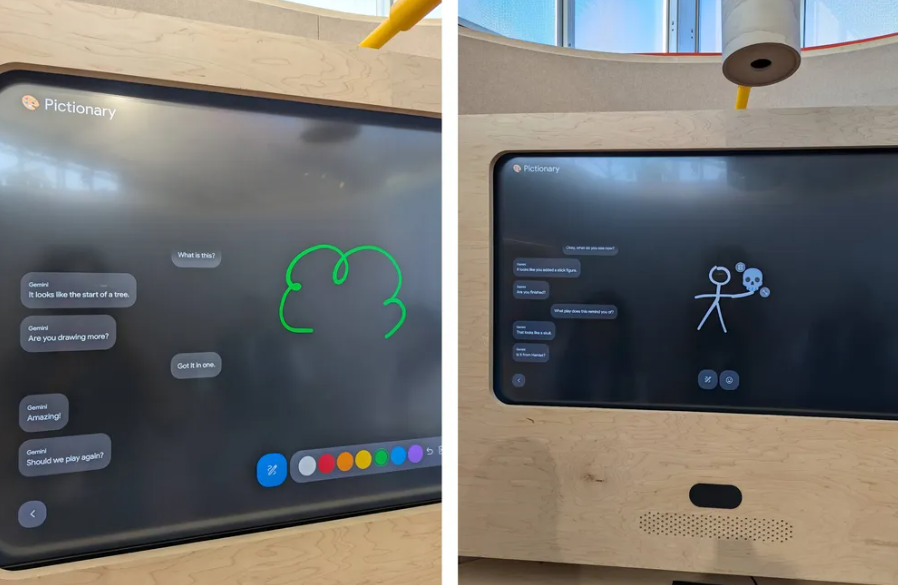

Astra alliterates with bananas, baguettes… and anything else you can show it.Another Astra demo let me play Pictionary with Gemini: a seemingly simple interaction, but one that required the agent to understand images, remember what had been drawn each round of the game and use general knowledge to actually guess what I was drawing. In one demo, Astra knew that a circle wasn’t enough to base a guess on, but as I added lines underneath it, it went quickly from ID-ing a stick figure to recognizing that a person holding up a skull emoji was Hamlet.

Astra is undefeated at Pictionary.

Astra is undefeated at Pictionary.Moving through the AI Sandbox and other demo stations felt like a glimpse into tomorrow. It was also humbling: Astra beat me at Pictionary in multiple rounds!